Tieba Crawler

import requests

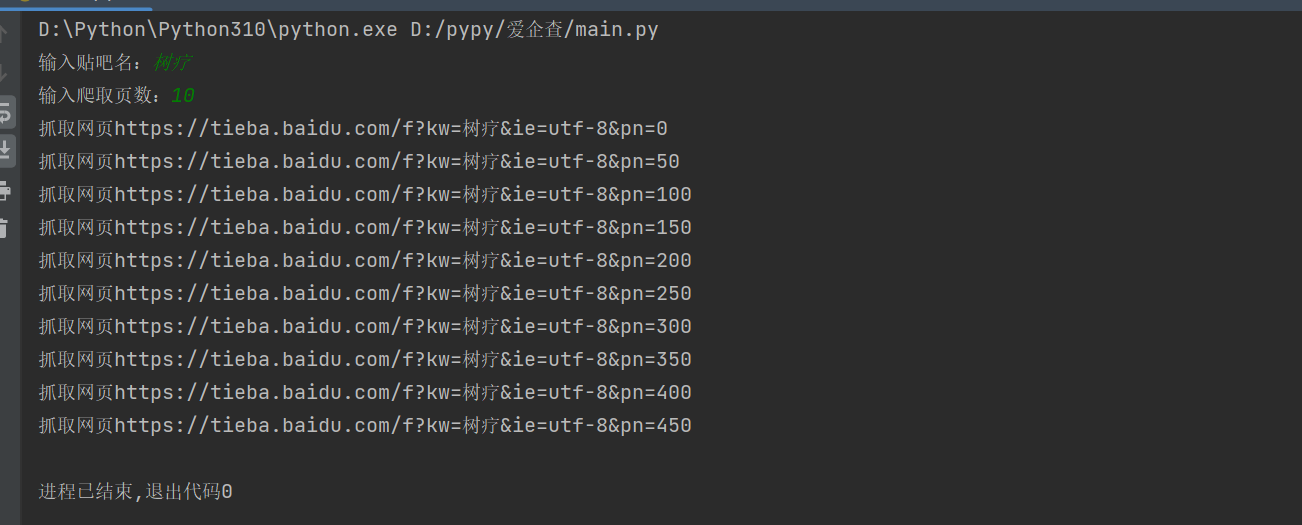

tieba_name = input("Enter the Tieba forum name: ")


class spider:
    def tieba_spider(self, tieba_name):
        # Store the forum name and define the URL template and request headers.
        self.tieba_name = tieba_name
        # The trailing {} is filled in later with each page's pn offset.
        self.url_temp = "https://tieba.baidu.com/f?kw=" + tieba_name + "&ie=utf-8&pn={}"
        self.headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36"
        }

    def url_list(self):
        # Ask how many pages to crawl and return the list of page URLs.
        page = int(input("Enter the number of pages to crawl: "))
        # Equivalent explicit loop:
        # url_list = []
        # for i in range(page):
        #     url_list.append(self.url_temp.format(i * 50))  # format() fills the {} placeholder
        # return url_list
        return [self.url_temp.format(i * 50) for i in range(page)]

    def get(self, url):
        # Send the request and return the response body as text.
        print(f"Fetching {url}")
        response = requests.get(url, headers=self.headers)
        return response.content.decode()  # decode the raw bytes (UTF-8 by default) into a str

    def save(self, html_str, page_num):
        # Save one page of HTML to a file.
        file_page = "{}_tieba_page_{}.html".format(self.tieba_name, page_num)
        with open(file_page, "w", encoding="utf-8") as f:
            f.write(html_str)

    def run(self):
        # Main flow: build the URL list, then fetch and save each page.
        page_list = self.url_list()
        # enumerate() supplies the 1-based page number directly.
        for page_num, url in enumerate(page_list, start=1):
            html_str = self.get(url)
            self.save(html_str, page_num)


run = spider()
run.tieba_spider(tieba_name)
run.run()
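
The URL template above is built by concatenating the forum name straight into the query string, so a name containing Chinese characters or spaces goes into the URL exactly as typed. A minimal sketch of the same page-URL construction with explicit percent-encoding is shown here. The names `build_page_urls`, `BASE_URL`, and `POSTS_PER_PAGE` are hypothetical and introduced only for illustration; the step of 50 per page is taken from the `i*50` in the code above.

```python
from urllib.parse import urlencode

BASE_URL = "https://tieba.baidu.com/f"
POSTS_PER_PAGE = 50  # assumption taken from the original code: pn advances by 50 per page


def build_page_urls(tieba_name, pages):
    """Return one listing URL per page, with the forum name percent-encoded."""
    urls = []
    for i in range(pages):
        # urlencode() escapes non-ASCII characters and spaces in each value.
        query = urlencode({"kw": tieba_name, "ie": "utf-8", "pn": i * POSTS_PER_PAGE})
        urls.append(f"{BASE_URL}?{query}")
    return urls


if __name__ == "__main__":
    for url in build_page_urls("python", 3):
        print(url)
```

A function like this could stand in for the list comprehension in `url_list`, with the page count passed in as an argument instead of being read with `input()` inside the method, which also makes the URL logic testable without a network connection.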